|

I am a research engineer at the Mobility and AI laboratory of Nissan Advanced Technology Center. I obtained a Ph.D. and a Master's degree in Information Sciences from Tohoku University, where I worked at the Human-Robot Informatics laboratory under the supervision of Masashi Konyo. Before my adventure in Japan, I obtained an engineering degree in Electronics from Universidad Mayor de San Andrés, where I was advised by Mauricio Améstegui. |

|

|

I am interested in creating technological solutions that improve people's interaction with data, computers, and robots. I have investigated factors affecting the performance of people using a P300-based Brain-Computer Interface, and I have developed methods based on haptic feedback for improving operators' performance in robot teleoperation and for enriching people's experience of visual media. |

|

Fast playback of first-person view (FPV) videos reduces watching time, but it also increases the perceived intensity of camera trembling and makes transient events, such as collisions, less evident. Here we propose using camera vibrations as vibrotactile feedback to support collision detection in fast video playback. |

|

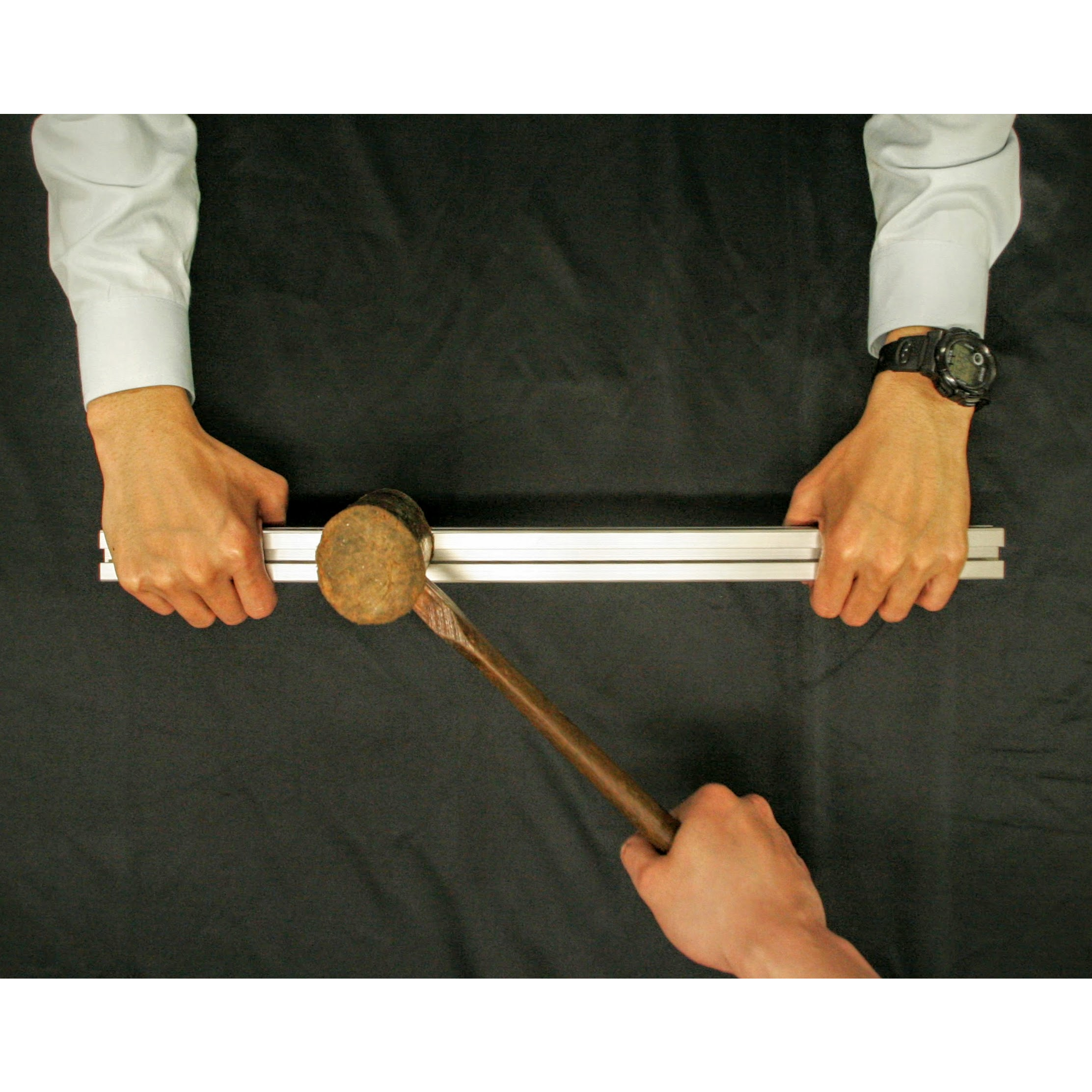

Unnoticed collisions compromise the success of remote exploratory tasks with mobile robots. We propose a vibrotactile stimulation method to represent frontal collisions that is based on the way people perceive impacts on a bar held with both hands. |

|

We propose a vibrotactile rendering method for the motion of a camera in first-person view videos that enables people to feel the movement of the camera with both hands. Concretely, we consider an arrangement of two vibrotactile actuators to render panning movements on the horizontal axis as vibrations that move from hand to hand, and to represent sudden vertical displacements of the camera as transient vibrations on both hands. |

|

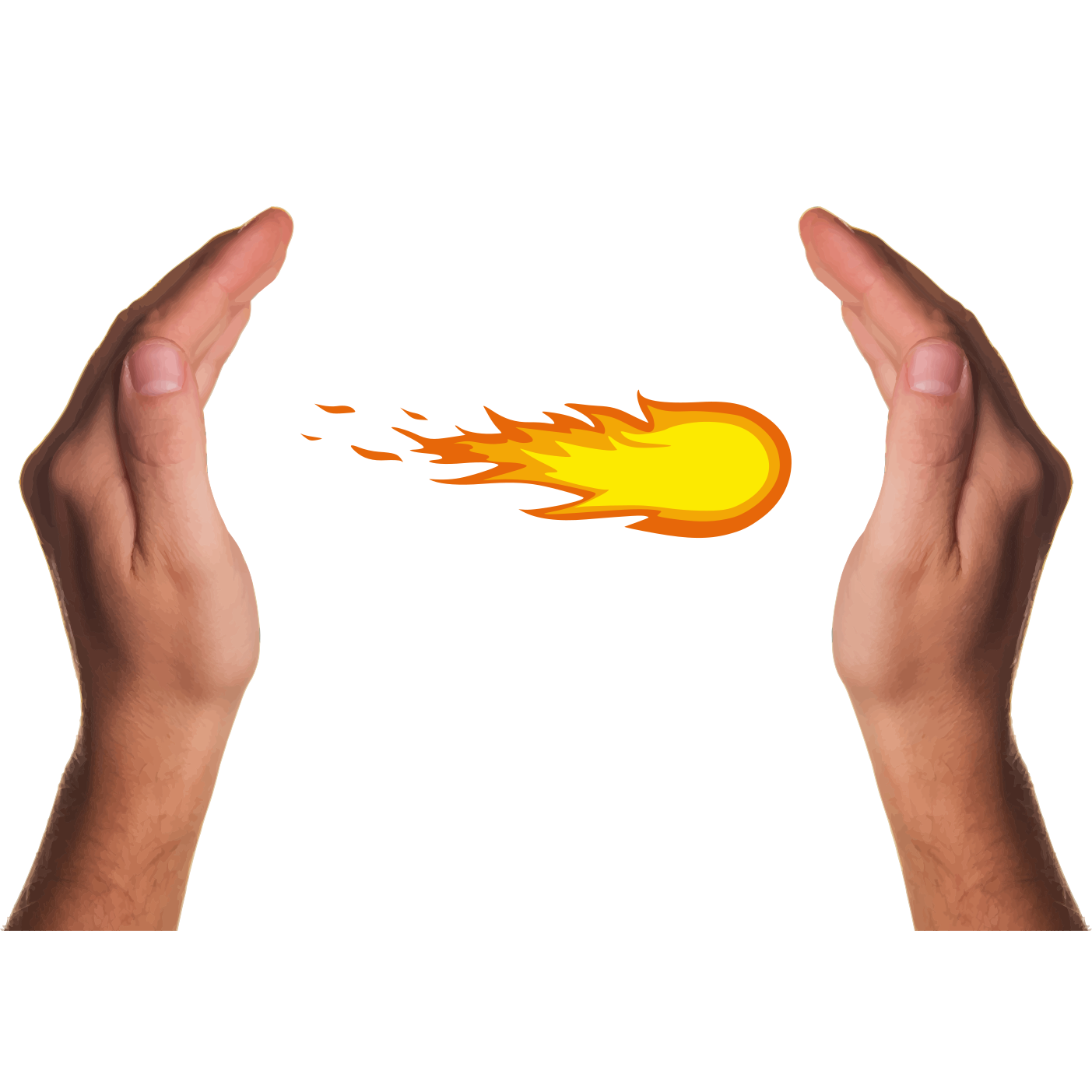

Perceptual illusions enable designers to go beyond hardware limitations to create rich haptic content. Nevertheless, spatio-temporal interactions for thermal displays have not been studied thoroughly. We focus on the apparent motion of hot and cold thermal pulses delivered at the thenar eminence of both hands.

|

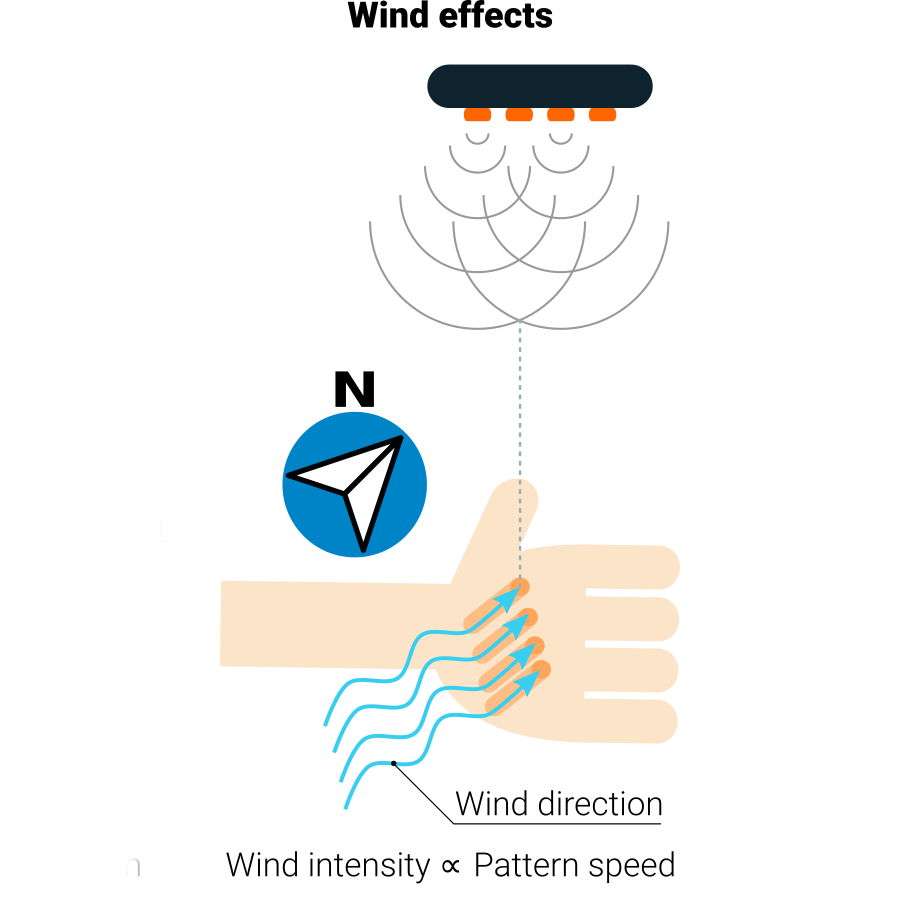

Cao Nan, Daniel Gongora, Horie Arata

Student Challenge - Eurohaptics, 2018

Video

Weather Telereport is a novel and informative way to check the weather. It consists of a Weather Box where users insert one hand to experience the weather conditions in a place of interest as if their hand had traveled there. Inside the Weather Box lies an ultrasonic haptic display that represents a wide variety of weather conditions on the users' hands and also lets them control the Weather Box with natural gestures. In addition, the Weather Box is equipped with fans and halogen lamps to further increase immersion. Weather Telereport lends itself to quickly checking the weather before leaving home and to exploring the weather around the world at leisure. |

|

Tele Teku-Teku is a shared walking experience. It is a system designed for friends who want to go for a stroll together but cannot because one of them is constrained to a certain place. We use the Vybe haptic gaming pad to provide vibrotactile feedback in sync with the footsteps of a distant friend. One of the users wears vibration sensors and carries an avatar robot equipped with an IMU and a camera; the other wears a Head-Mounted Display and sits on a chair enhanced by the gaming pad. Together, these technologies set the stage for a rich and engaging experience. Shall we walk? |

|

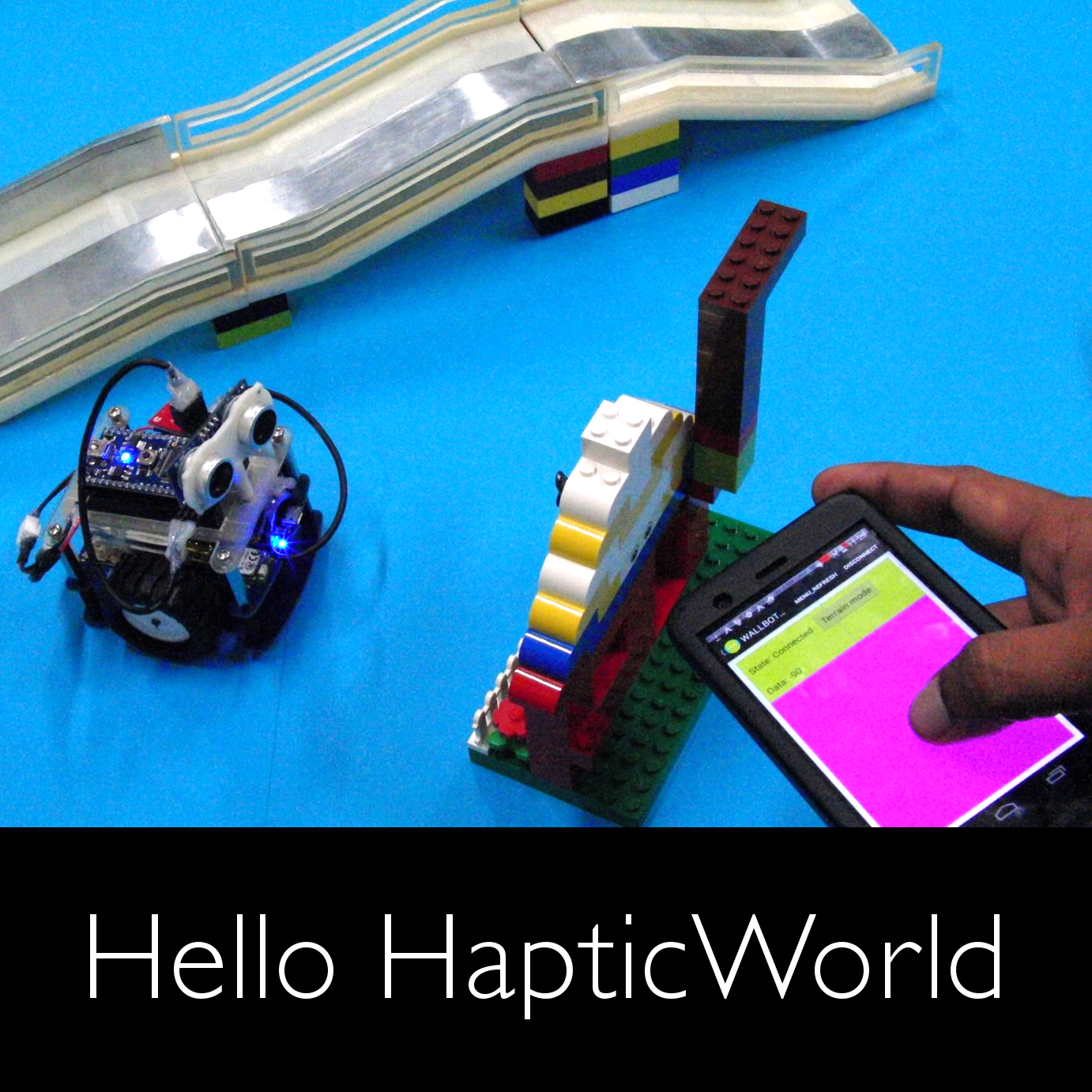

Dennis Babu, Daniel Gongora, Seonghwan Kim, Shunya Sakata

Student Challenge - WorldHaptics Conference, 2015 (2nd Place, announcement)

Video

Hello HapticWorld uses haptic feedback to encourage kids to explore their surroundings. We use a smartphone equipped with a variable friction display to control a small mobile robot. The variable friction display lets the user feel the inclination of the robot and its distance to obstacles ahead. |

|

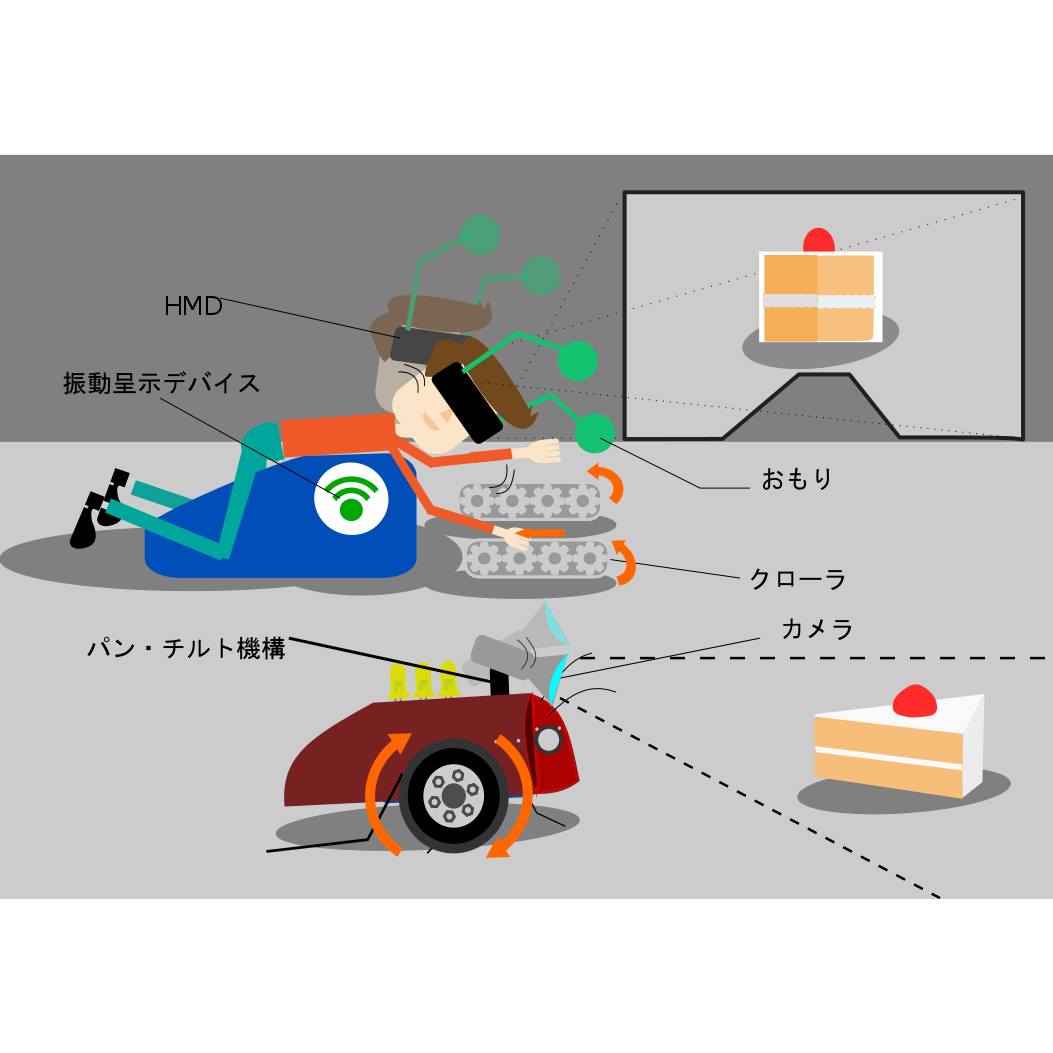

Cockroach-Human Interaction lets participants experience the world from the point of view of a cockroach. Users crawl to control a small mobile robot using a custom input interface built around a pair of conveyor belts. Footsteps near the robot trigger vibrations on the user's chest, and a lantern pointed towards the robot causes the camera to shake, inducing feelings of agitation and anxiety in the user. |

|

Design: Jon Barron. |